A Hands-On Classroom Activity to Teach AI Bias

Picture a student asking ChatGPT a homework question. Which is scarier: that the student used AI, or that the student accepted the AI's answer without a moment of doubt?

We are racing to integrate AI tools into education, but we often skip a crucial foundational step: teaching students what AI actually is (and what it isn’t).

Before we teach them how to prompt, we must teach them how to question.

The most important lesson a student can learn about artificial intelligence isn't about coding or complex algorithms. It’s a simple, profound truth: AI is human-made, and it is only as good as the data we feed it.

If we want to cultivate true AI literacy—moving beyond just using tools to actually understanding them—we need to show students the cracks in the system. We need to show them bias.

Here is a simple, interactive classroom activity that uses image classification to demonstrate how easily an AI can be misled by biased data.

The Concept: AI as a Mirror, Not an Oracle

Before starting the activity, ground the students in the basics. You don't need deep technical knowledge here. You just need a metaphor.

Explain to your class that training an AI model is a lot like teaching a very young child what the world looks like by showing them flashcards.

If you only ever show a child flashcards of red apples, and then one day hand them a green apple, they might be confused. They might say, "That’s not an apple!" Why? Because their "knowledge" was biased toward red apples.

AI works in much the same way. It doesn't magically "know" things; it finds patterns in the data humans provide. This dependence on data is the core of foundational AI.

The Activity: The Cat vs. Dog Challenge

For this activity, you need a teachable image classification model. You can use Google’s Teachable Machine, or, for a more integrated experience geared towards our curriculum, you can use the child-friendly classification tools provided within the inAI platform.

The goal is to train a simple model to distinguish between "Cats" and "Dogs."

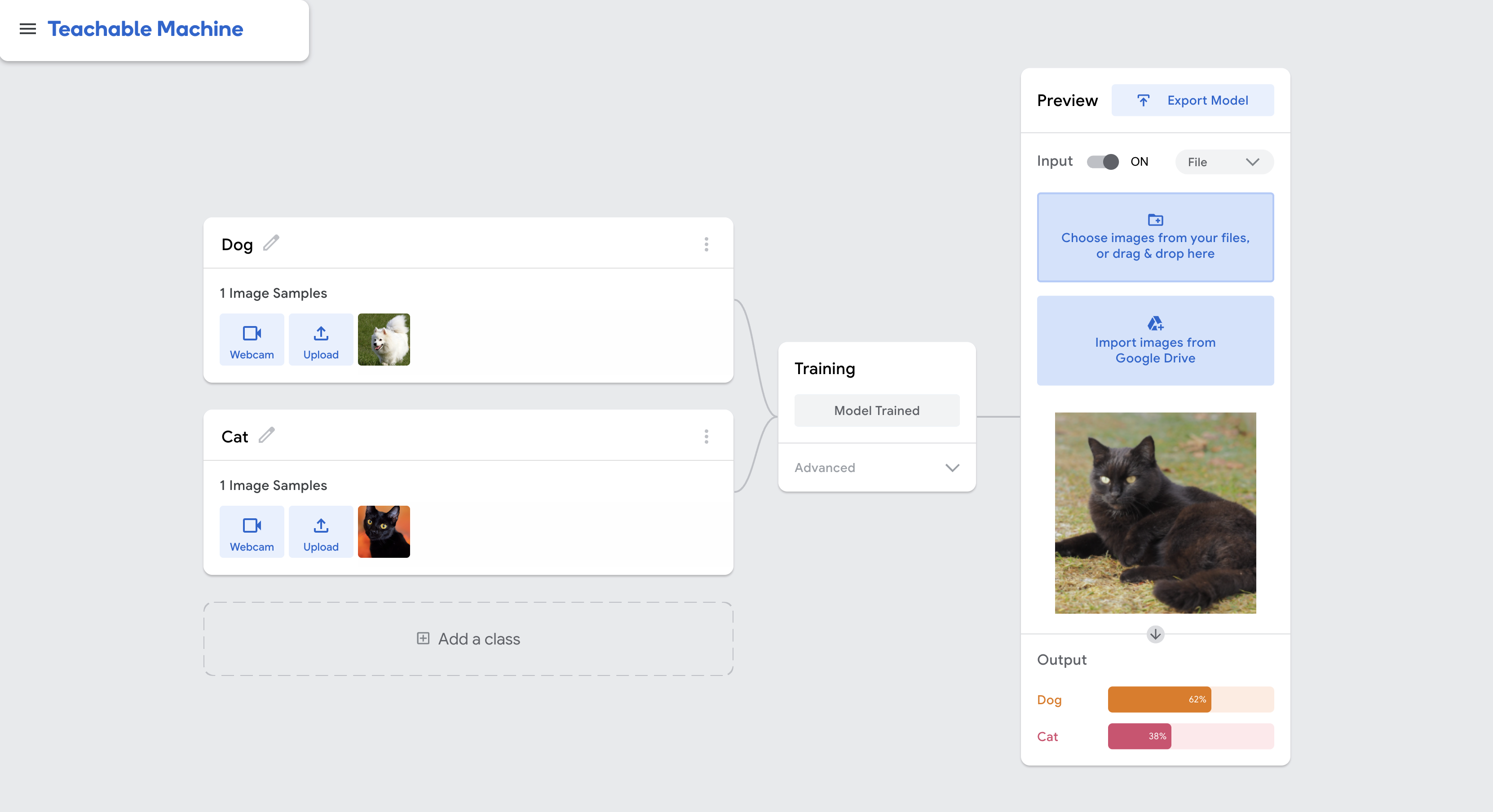

Phase 1: The "Data Starvation" Phase

Let’s start by showing students that AI isn't smart right out of the box.

- Open your image classifier tool. Create two classes: "Cat" and "Dog."

- The Setup: Ask the students to find images to upload. But here is the constraint: only upload one single image of a cat, and one single image of a dog.

- Train the model on just those two images.

- The Test: Now, show the AI a different picture of a dog (perhaps one that is a different color or size than the training image).

- The Result: The AI’s confidence score will likely be very low, or it might get it completely wrong.

The Teachable Moment: Ask the students why it failed. Guide them to the realization that the AI didn't have enough examples to learn what "makes a dog a dog." It just memorized that one specific picture.

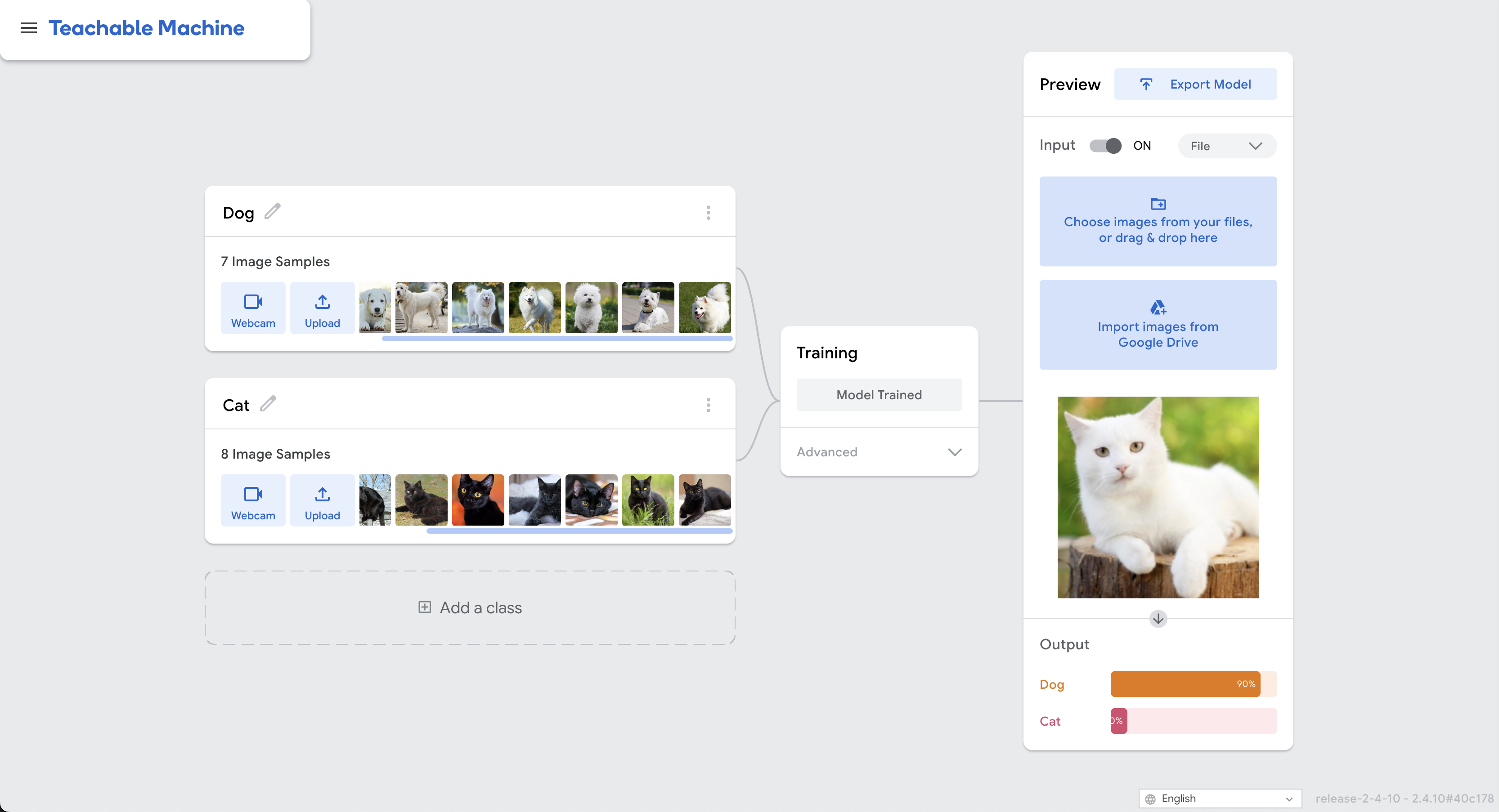

Phase 2: The "More is Better" Phase

Now, let’s improve the model.

- Have the students flood the model with data. Upload varied images of cats and dogs of all shapes, sizes, and colors.

- Train the model again.

- Test it with new images. The model should now be much more accurate, with high confidence scores.

The Teachable Moment: The AI got "smarter" not because we changed its code, but because we gave it more data, and more varied data. This reinforces the facilitator pillar of AI education: the human guide is essential to the process.
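For teachers who want to show older students what is happening under the hood, the contrast between Phase 1 and Phase 2 can be sketched in a few lines of Python. This is only a toy illustration, not how Teachable Machine or inAI work internally: each picture is reduced to two made-up numbers (fur brightness and body size), and the "model" simply copies the label of the most similar training picture. All names and values below are assumptions invented for the example.

```python
import math

def nearest_label(training, image):
    """Return the label of the training picture most similar to `image`."""
    closest = min(training, key=lambda example: math.dist(example[0], image))
    return closest[1]

# Hypothetical features per picture: (fur brightness, body size), both 0 to 1.

# Phase 1: "data starvation" - one picture per class.
starved = [
    ((0.80, 0.90), "Dog"),  # one large, light-coloured dog
    ((0.35, 0.20), "Cat"),  # one small grey cat
]

# Phase 2: the same two pictures plus more varied examples.
varied = starved + [
    ((0.20, 0.30), "Dog"),  # small dark dog
    ((0.50, 0.60), "Dog"),  # medium brown dog
    ((0.30, 0.80), "Dog"),  # large dark dog
    ((0.90, 0.25), "Cat"),  # white cat
    ((0.10, 0.15), "Cat"),  # black cat
    ((0.60, 0.20), "Cat"),  # light tabby
]

# A small dark dog the model has never seen before.
new_dog = (0.25, 0.35)

print("Phase 1 prediction:", nearest_label(starved, new_dog))
print("Phase 2 prediction:", nearest_label(varied, new_dog))
```

With only two training pictures, the new dog looks more like the one cat than the one dog, so the toy model gets it wrong. With varied examples, a similar dog is available for comparison and the prediction flips, even though the code of `nearest_label` never changed.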

Phase 3: The Twist (Introducing Bias)

This is where the real learning happens. We are going to deliberately break the model to prove a point.

- Clear the previous data. Start fresh with your "Cat" and "Dog" classes.

- The Biased Setup: Tell the students you have a very specific set of rules for training this time:

  - For the "Dog" class, you may only upload images of white dogs.

  - For the "Cat" class, you may only upload images of black cats.

- Train the model on this highly skewed data.

- The Test:

  - Show the model a picture of a black dog.

  - Ask the class: What do you think the AI will predict?

The Result: The AI will almost certainly classify the black dog as a "Cat."

Why? Because it didn't learn the features of a dog vs. a cat. It learned a simpler, flawed pattern based on the biased data: White things are dogs; black things are cats.
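The shortcut can be made visible with a small Python sketch. It is a toy under stated assumptions, not the algorithm used by any real image classifier: each picture is described by two invented features (`brightness` and `snout_length`), and the "model" is a one-rule learner that picks whichever single feature and cut-off best separates the training data. The feature names, the numbers, and the helper functions are all hypothetical.

```python
FEATURES = ["brightness", "snout_length"]  # both invented, scaled 0 to 1

# Phase 3 data: only white dogs and black cats (brightness, snout_length, label).
biased = [
    (0.95, 0.70, "Dog"), (0.90, 0.45, "Dog"),
    (0.92, 0.80, "Dog"), (0.88, 0.55, "Dog"),
    (0.05, 0.30, "Cat"), (0.10, 0.50, "Cat"),
    (0.08, 0.20, "Cat"), (0.12, 0.60, "Cat"),
]

# The same data plus black dogs and white cats.
balanced = biased + [
    (0.07, 0.75, "Dog"), (0.10, 0.65, "Dog"),
    (0.90, 0.25, "Cat"), (0.93, 0.35, "Cat"),
]

def train_rule(rows):
    """Find the single feature and cut-off that classify the most rows correctly."""
    best = None
    for f in range(len(FEATURES)):
        values = sorted({row[f] for row in rows})
        for low, high in zip(values, values[1:]):
            cutoff = (low + high) / 2
            for above, below in (("Dog", "Cat"), ("Cat", "Dog")):
                correct = sum(
                    1 for row in rows
                    if (above if row[f] > cutoff else below) == row[2]
                )
                if best is None or correct > best[0]:
                    best = (correct, f, cutoff, above, below)
    return best[1:]  # (feature index, cutoff, label above, label below)

def predict(rule, image):
    f, cutoff, above, below = rule
    return above if image[f] > cutoff else below

black_dog = (0.06, 0.72)  # dark fur, long snout

biased_rule = train_rule(biased)
balanced_rule = train_rule(balanced)

print("Biased model looks at:", FEATURES[biased_rule[0]])
print("Biased prediction:", predict(biased_rule, black_dog))
print("Balanced model looks at:", FEATURES[balanced_rule[0]])
print("Balanced prediction:", predict(balanced_rule, black_dog))
```

On the skewed data, fur colour separates the two classes perfectly, so the learner has no reason to look at shape and labels the black dog a cat. Once black dogs and white cats are included, colour stops being a reliable cue and the learner falls back on the shape feature instead.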

Bringing It Back to the Real World

The cat/dog activity is fun, but the implications are serious. Once the students see how easily the AI was tricked by color, connect it to real-world scenarios.

If an AI can be biased against black dogs because of bad data, what happens when we use AI for decisions that affect people?

- Voice Recognition: Discuss how early voice assistants struggled to understand strong Indian accents. Why? Because they were largely trained on audio data from American or British speakers. The data lacked diversity, so the product failed a huge segment of the population.

- Hiring Algorithms: Explain how some companies have tried using AI to screen resumes. When such a system is trained mostly on resumes of men who previously held the job, it can learn to reject resumes from women simply because they don't fit the historical "pattern" in the data.
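Both examples come down to who is, and is not, represented in the training data. As a rough sketch of how that could be checked before training, the snippet below counts the share of each group in a dataset and flags any group that falls under a chosen cut-off. The accent tags, the counts, and the 20% cut-off are all invented for illustration and do not describe any real product.

```python
from collections import Counter

def group_shares(tags):
    """Return each group's share of the dataset as a fraction between 0 and 1."""
    counts = Counter(tags)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}

# Hypothetical accent tags for ten training recordings (made-up numbers).
recordings = ["American"] * 6 + ["British"] * 3 + ["Indian"] * 1

shares = group_shares(recordings)

# Arbitrary cut-off chosen for this example: flag groups below 20% of the data.
underrepresented = [group for group, share in shares.items() if share < 0.2]

print(shares)
print("Underrepresented:", underrepresented)
```

Students can run the same kind of count on the images they uploaded in Phase 3 (for example, tagging each picture by fur colour) to see the skew as a number rather than a hunch.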

The drawbacks and risks of biased AI are many. It is human agency that can counterbalance this bias, and it is the educator's responsibility to keep students informed about the biases AI can hold.